How to Run Open-Source LLMs with a ChatGPT-Like Web Experience


Welcome to the exciting world of accessible large language models (LLMs)! With the advent of tools like Ollama, deploying and running powerful LLMs locally has never been easier. In this blog post, we will walk through getting started with Ollama, from installing the software to running the latest LLaMA 3.1 model. We’ll then take it a step further by running Open WebUI in a Docker container to get an interactive, web-based interface to our local model. This setup keeps the model running on our own machine while providing a user-friendly, ChatGPT-like environment for seamless interactions.

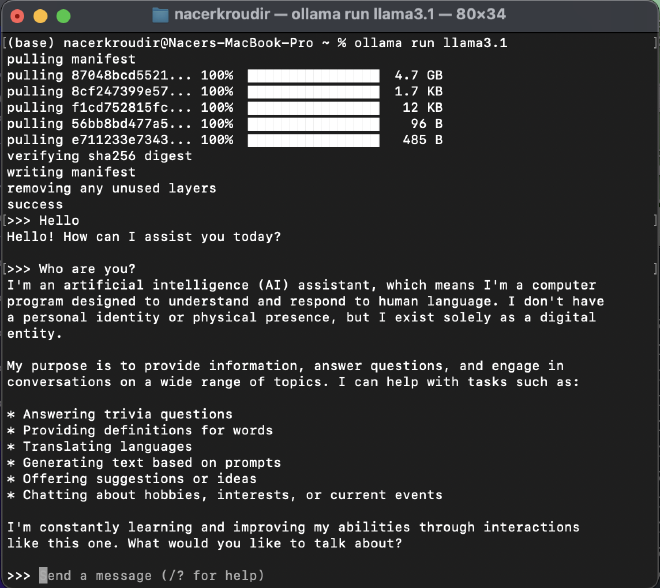

Download Ollama software and the LLM

Ollama is an open-source project designed to simplify running large language models (LLMs) locally. It supports various models, including LLaMA 3, Phi 3, Mistral, and Gemma 2, and is available for macOS, Linux, and Windows. Its key features include compatibility with the OpenAI Chat Completions API, which lets users point existing OpenAI tooling at locally running models, and support for tool calling, which enables models to perform more complex tasks by interacting with external tools [1]. Once Ollama is installed, we are just one step away from interacting with any supported LLM. We will be prompted to run this command in the terminal:

```shell
ollama run llama3
```

Instead, we are going to try the latest version as of July 30, 2024: LLaMA 3.1. The command is simply:

```shell
ollama run llama3.1
```

This command downloads the LLaMA 3.1 model (on first use) and starts an interactive session with it. In no time, we have a fully operational LLaMA 3.1 model right in our terminal, ready for us to interact with. By default, the 8 billion parameter model is downloaded, which is a good fit for many everyday tasks. However, if you have the computational power and need more advanced capabilities, you can opt for the larger 70 billion or even 405 billion parameter models.
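To get a feel for what "the computational power" means for each size, here is a rough, back-of-the-envelope sketch. It assumes 4-bit quantized weights, which we take as an approximation of Ollama's default downloads (an assumption, not something stated in this post), and it counts the weights only: the context (KV) cache and runtime overhead need additional memory on top. The helper names `ollama_tag` and `weight_memory_gb` are hypothetical; the `llama3.1:<size>b` tags follow Ollama's model naming.

```python
"""Rough memory estimate for the LLaMA 3.1 sizes (weights only).

Assumption: weights are stored at about 4 bits each, which we treat as an
approximation of Ollama's default quantized downloads. KV cache and runtime
overhead are NOT included, so real requirements are higher.
"""

LLAMA31_SIZES_BILLION = (8, 70, 405)  # parameter counts of the three LLaMA 3.1 variants


def ollama_tag(size_billion: int) -> str:
    """Hypothetical helper: Ollama model tag for a LLaMA 3.1 size, e.g. 70 -> 'llama3.1:70b'."""
    return f"llama3.1:{size_billion}b"


def weight_memory_gb(size_billion: float, bits_per_weight: float = 4.0) -> float:
    """Weights-only estimate: parameters x bits per weight / 8 bytes, reported in GB (1e9 bytes)."""
    total_bytes = size_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


for size in LLAMA31_SIZES_BILLION:
    # Print each tag next to its approximate weight footprint at 4-bit precision.
    print(f"{ollama_tag(size):<15} ~{weight_memory_gb(size):.1f} GB of weights at 4-bit")
```

Under these assumptions the estimate works out to roughly 4 GB for 8B, 35 GB for 70B, and 202.5 GB for 405B, which is why the 8B model is the practical default on a typical laptop. A larger variant is selected by its tag, for example `ollama run llama3.1:70b`.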

But that’s just the beginning!
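Because Ollama is compatible with the OpenAI Chat Completions API, the same local model can also be called from code rather than only from the terminal. The sketch below uses only the Python standard library and assumes Ollama is serving on its default address, `http://localhost:11434`, with the OpenAI-compatible routes under `/v1`, and that `llama3.1` has already been downloaded. The helper functions `build_chat_request`, `extract_reply`, and `chat` are hypothetical names for illustration, not part of Ollama.

```python
"""Minimal sketch: query a local Ollama model through its OpenAI-compatible endpoint.

Assumptions: Ollama runs on its default port 11434 and the `llama3.1` model
has already been pulled (e.g. via `ollama run llama3.1`).
"""
import json
import urllib.request

OLLAMA_BASE_URL = "http://localhost:11434/v1"  # assumed default address + OpenAI-compatible prefix


def build_chat_request(prompt: str, model: str = "llama3.1",
                       base_url: str = OLLAMA_BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request for a single user message."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one complete JSON response instead of streamed chunks
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_json: dict) -> str:
    """Pull the assistant's text out of an OpenAI-style chat completion response."""
    return response_json["choices"][0]["message"]["content"]


def chat(prompt: str, model: str = "llama3.1") -> str:
    """Send the prompt to the local model and return its reply (needs a running Ollama server)."""
    request = build_chat_request(prompt, model)
    with urllib.request.urlopen(request, timeout=120) as response:
        return extract_reply(json.load(response))
```

With Ollama running, `print(chat("In one sentence, what is Ollama?"))` should print the model's answer. Keeping request construction separate from sending makes it easy to swap in the official OpenAI client later by pointing its base URL at the same local server.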

Elevate Your Experience with Open WebUI

For a more robust and enjoyable experience, we can run Open WebUI in a Docker container and connect it to our local Ollama server for an interactive, web-based interface. Docker provides a consistent environment for running applications across different systems. It simplifies setup by packaging all the dependencies and configuration inside a container, which makes the interface easy to deploy and manage, while the model itself keeps running in Ollama on the host.

Open WebUI, on the other hand, offers a seamless, user-friendly interface akin to ChatGPT. This web-based interface enhances our interaction with the LLM by providing a clean and intuitive chat environment. Features like full Markdown and LaTeX support allow responses to be richly formatted, making the interaction more engaging and informative. Additionally, Open WebUI goes beyond plain text: we can upload PDF files and use audio input. With Docker running and the LLM downloaded locally, we use the following command to deploy Open WebUI with Ollama support in a Docker container:

```shell
docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main
```
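The `docker run` command packs several flags into one line. The sketch below rebuilds the same command as an argument list, with a comment explaining each flag. The function names `open_webui_docker_args` and `launch`, and the `host_port` parameter, are hypothetical conveniences for this example; the flags and values themselves are exactly those of the command above.

```python
"""Sketch: the Open WebUI `docker run` command, assembled flag by flag.

The helper names and the `host_port` parameter are hypothetical; the flags
and their values match the command in the post.
"""
import shlex
import subprocess


def open_webui_docker_args(host_port: int = 3000) -> list[str]:
    """Return the docker CLI arguments, one flag per line with its purpose."""
    return [
        "docker", "run",
        "-d",                                  # detached: run the container in the background
        "-p", f"{host_port}:8080",             # map a host port to Open WebUI's port 8080 inside the container
        "--add-host=host.docker.internal:host-gateway",  # resolve host.docker.internal to the host, where Ollama runs
        "-v", "open-webui:/app/backend/data",  # named volume so chats and settings persist across container restarts
        "--name", "open-webui",                # fixed container name for later `docker stop`/`docker logs`
        "--restart", "always",                 # restart whenever the container stops, incl. when the Docker daemon restarts
        "ghcr.io/open-webui/open-webui:main",  # image and tag to run
    ]


def launch(host_port: int = 3000) -> None:
    """Start the container (requires Docker to be installed and running)."""
    subprocess.run(open_webui_docker_args(host_port), check=True)


# Print the equivalent one-line shell command.
print(shlex.join(open_webui_docker_args()))
```

Calling `launch()` is equivalent to typing the command in the terminal. Changing `host_port` (for example to 3001 if port 3000 is already in use) would make the interface available at http://localhost:3001 instead, since only the host side of the `-p` mapping changes.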

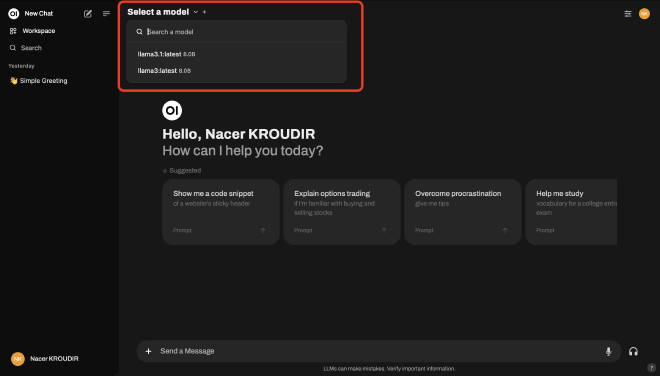

After executing the command, a new container is created and can be seen in the Docker interface (or with `docker ps`). We can now access Open WebUI by navigating to http://localhost:3000 in our browser. This opens a fully functional, interactive web interface, allowing us to start using our AI model immediately, even offline! We start by selecting the model in the top-left corner:

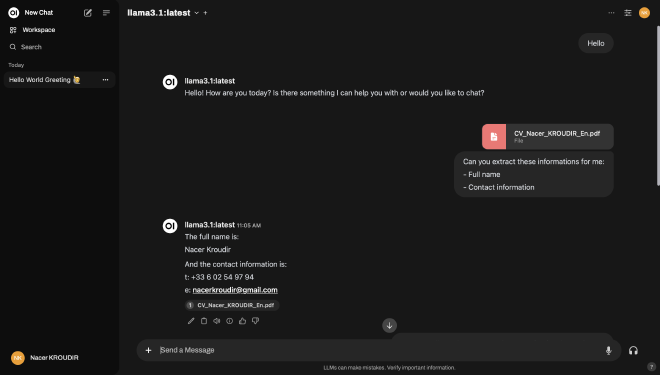

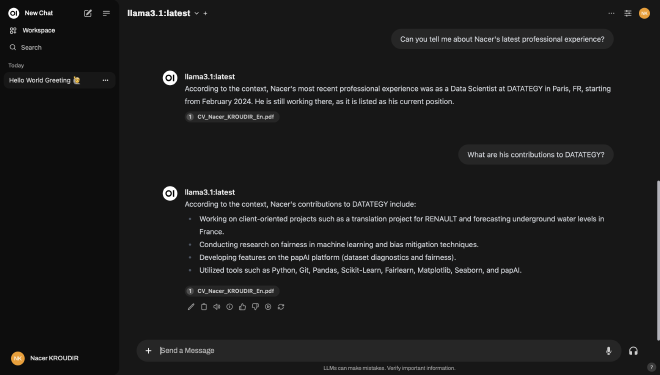

And enjoy the chat! You can upload PDF files, and the model will answer questions based on their content, making for a highly interactive and informative experience. A brief demonstration of the LLaMA 3.1 (8B version) capabilities can be seen in the following:

Reflections on Open-Source LLMs

Open-source large language models (LLMs) are transforming the landscape of artificial intelligence and machine learning. These models, which include popular names like LLaMA 3, Mistral, and others supported by projects such as Ollama, offer a range of advantages that are fueling innovation and democratizing access to advanced AI technology. Let’s dive into a discussion about the key benefits of open-source LLMs and how they are shaping the future of AI.

- Accessibility and Democratization: Open-source LLMs make cutting-edge technology accessible to a broader audience. Researchers, developers, and enthusiasts can experiment with and build upon these models without the need for expensive licenses or proprietary software. This democratization fosters a more inclusive AI community where innovation can thrive at all levels.

- Transparency and Trust: Transparency is a fundamental principle of open-source projects. It allows users to inspect the code, understand the model architectures, and, where the details are published, verify the training processes, thereby building trust. This openness makes it easier to detect hidden biases or undesirable behaviors embedded within the models. However, it’s important to note that the training dataset for some models, such as LLaMA 3.1, is not fully disclosed. The LLaMA 3.1 paper mentions that its dataset is created from various sources containing knowledge up to the end of 2023, but it does not provide detailed information about these sources. This lack of clarity raises questions about the ethical collection of the data, a challenge that all LLM development groups face. While transparency in code and architecture is crucial, transparency in data sources is equally important to fully address trust and ethical considerations in AI development.

- Collaborative Improvement: The open-source community thrives on collaboration. Contributions from diverse groups of developers lead to rapid advancements and improvements. Bugs are identified and fixed more quickly, and new features and optimizations are continuously added, enhancing the overall performance and utility of the models.

- Customization and Flexibility: Open-source LLMs offer unparalleled flexibility. Users can customize models to suit their specific needs, whether it’s fine-tuning them for particular applications, integrating them with other tools, or even modifying the core architecture. This level of customization is often not possible with proprietary models.

- Cost Efficiency: Open-source models eliminate licensing fees, making advanced AI more affordable. This is particularly beneficial for startups, educational institutions, and non-profit organizations that might not have the resources to invest in costly AI solutions. To quote Mark Zuckerberg’s letter: “Developers can run inference on Llama 3.1 405B on their own infra at roughly 50% the cost of using closed models like GPT-4o, for both user-facing and offline inference tasks.”

Given these advantages, it’s clear that open-source LLMs are not just a technical innovation but also a catalyst for broader societal benefits. They empower individuals and organizations to harness the power of AI in ways that were previously unimaginable. How do you think open-source LLMs will continue to shape the future of AI? What potential challenges and opportunities do you foresee in this rapidly evolving landscape?